
Email Optimization Playbook: How To Test, Tweak And Win More Opens & Clicks

BiziBusiness

Aug 12, 2025

23 min read

You’ve spent time crafting your email. The subject line is clever, the design looks sharp, the offer is strong. You hit “send”… and then wait.

Maybe it performs well. Maybe it doesn’t. But do you actually know why?

Most marketers rely too heavily on instinct, past experience, or “what worked last time.” But inboxes are crowded, audiences evolve, and best guesses aren’t enough anymore. That’s where email testing and optimization come in.

Smart testing helps you make decisions based on data—not gut feelings. And when done right, optimization isn’t just about minor lifts in open rates. It can unlock double-digit improvements in clicks, conversions, and even revenue per subscriber.

This guide is your playbook for turning uncertainty into insight—and insight into growth. You’ll learn what to test, how to test it, and how to turn your results into better, faster, more profitable campaigns.

Ready to build email campaigns that perform on purpose—not by accident?

What Is Email Optimization—And Why It Matters

Email optimization is the ongoing process of improving your emails through intentional testing, analysis, and refinement. It’s not just about making your emails look better or sound clever—it’s about boosting performance in measurable, repeatable ways.

In practice, it means refining subject lines to increase open rates, testing different layouts to improve click-throughs, and fine-tuning calls-to-action (CTAs) to drive more conversions. It’s how you take the guesswork out of email marketing and turn every send into a smarter version of the last.

Optimization vs. Experimentation: Know the Difference

Many marketers confuse experimentation with optimization. The difference is simple but important:

- Experimentation is about trying something new.

- Optimization is about trying something new with purpose and structure.

For example:

- “Let’s change the CTA color to see if more people click.” → Experiment.

- “We’ll test red vs. green CTAs with 50/50 traffic, measuring click-through rate, and declare a winner after 48 hours.” → Optimization.

Optimization always includes:

- A clear hypothesis

- A controlled test environment

- Defined success metrics

- Actionable insights that inform future campaigns

The goal isn’t just to find what works but to understand why it works and to scale it across your entire email strategy.

Why It Matters: Small Wins, Big Impact

In email marketing, small performance gains compound over time. A modest 3% increase in open rates or a 5% lift in click-throughs—when multiplied across dozens of campaigns and thousands of contacts—can generate significant additional revenue.

For example:

- A list of 50,000 subscribers with a 2-percentage-point CTR improvement gains about 1,000 extra clicks per campaign

- If your average conversion rate is 10%, that’s 100 more conversions—with no extra ad spend
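The arithmetic behind that example can be sketched in a few lines. The function name and parameters are illustrative, and the CTR gain is treated as an absolute gain in percentage points, measured against the full list.

```python
def extra_results(list_size: int, ctr_gain_points: float, conversion_rate: float) -> tuple[float, float]:
    """Return (extra clicks, extra conversions) per campaign.

    ctr_gain_points is an absolute gain in click-through rate
    (0.02 means two percentage points). conversion_rate is the
    share of clicks that go on to convert.
    """
    extra_clicks = list_size * ctr_gain_points
    extra_conversions = extra_clicks * conversion_rate
    return extra_clicks, extra_conversions


# The figures from the example above: 50,000 subscribers,
# +2 points of CTR, 10% of clicks converting.
clicks, conversions = extra_results(50_000, 0.02, 0.10)
print(f"{clicks:.0f} extra clicks, {conversions:.0f} extra conversions")
```

Swapping in your own list size and conversion rate gives a quick sense of whether a planned test is worth the effort.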

And unlike ad channels, email optimization improves an owned asset: your list. You’re not paying more to get better results. You’re simply getting smarter.

Optimization Helps You Evolve With Your Audience

Markets shift. People change. What worked last quarter may fall flat tomorrow. Optimization ensures your strategy and planning keep pace with your audience’s behavior, preferences, and expectations.

It helps you:

- Identify fatigue before performance crashes

- Understand what resonates across segments or buyer stages

- Adapt faster to shifts in device usage, inbox placement, or engagement trends

In short, email optimization turns your campaign data into a growth engine. It’s how you build emails that not only look good but consistently perform.

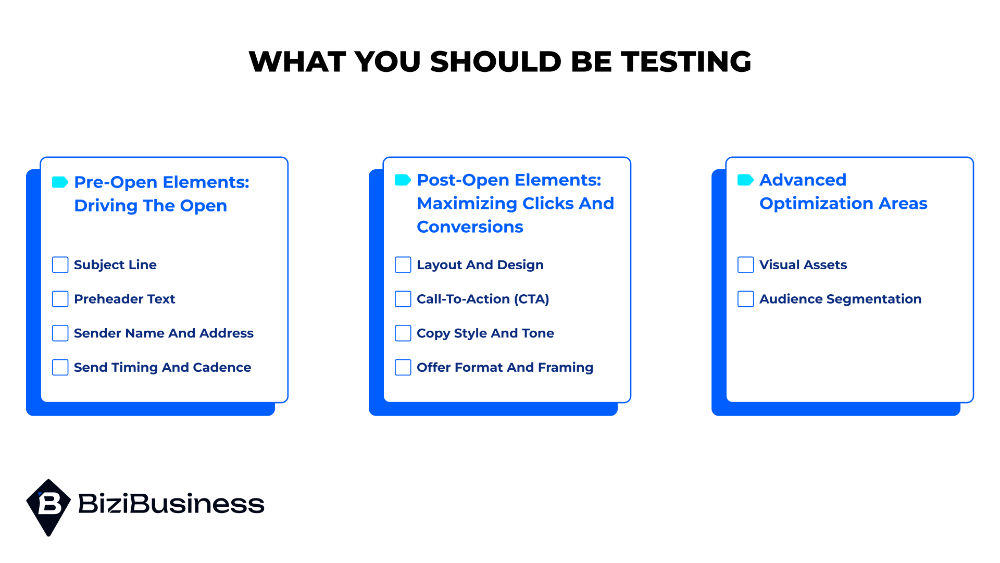

What You Should Be Testing

Optimization starts with clarity. To improve your email results, you need to know what elements directly influence performance—and how to test them methodically. Every part of an email plays a role in the user journey, from inbox to click-through to conversion.

Below, we break testing opportunities into two stages: pre-open and post-open. Each stage offers multiple high-impact variables that can unlock major performance gains when optimized intentionally.

Pre-Open Elements: Driving the Open

These are the factors that determine whether your email even gets opened. No matter how great your content is, it can’t convert if it never gets seen.

1. Subject Line

This is your first impression—and arguably the most tested email component.

What to test:

- Length: Short (under 40 characters) vs. long (up to 80+)

- Tone: Friendly, urgent, witty, formal—each appeals to different audiences

- Personalization: Including the recipient’s name, company, or behavior history

- Content style: Questions, numbers, how-to statements, curiosity hooks

- Clarity vs. curiosity: Direct offers (“20% off for you”) vs. intrigue (“This surprised us…”)

Why it matters: The subject line determines whether your audience even gives your message a chance. A great subject line earns attention. A weak one gets ignored or filtered.

2. Preheader Text

This secondary snippet supports the subject line and is often overlooked.

What to test:

- Continuation vs. contrast to the subject line

- Emotional language vs. factual reinforcement

- Including additional context or benefits

- Length and visibility on mobile vs. desktop

Why it matters: The preheader can be the deciding factor between a quick delete and a click—especially on mobile devices where it occupies more screen space.

3. Sender Name and Address

Trust influences open behavior just as much as curiosity.

What to test:

- Brand-only sender name (e.g., “BiziBusiness”) vs. personalized (“Colin at BiziBusiness”)

- Real person vs. department (e.g., “Support Team” vs. “Colin from Customer Success”)

- Friendly tone vs. official voice

Why it matters: The sender name can instantly inspire confidence—or skepticism. People open emails from senders they recognize and trust.

4. Send Timing and Cadence

When you send is almost as important as what you send.

What to test:

- Time of day (morning, afternoon, evening)

- Day of week (midweek vs. weekend)

- Frequency (weekly vs. biweekly vs. monthly)

- Send time by time zone or subscriber location

Why it matters: Timing can dramatically affect open rates. Audiences respond differently depending on when they receive your message. Use testing to identify the windows where your audience is most responsive.

Post-Open Elements: Maximizing Clicks and Conversions

Once a subscriber opens your email, your job shifts to holding attention and driving action. Here’s what to test post-open.

5. Layout and Design

Visual structure shapes readability, flow, and click behavior.

What to test:

- One-column (mobile-optimized) vs. multi-column layout

- Short-form (quick updates) vs. long-form (educational or story-based)

- Image-first vs. text-first hierarchy

- Button placement (top, mid, bottom of email)

- Visual pacing: whitespace, headers, dividers

Why it matters: Good design improves clarity and flow, guiding users to your CTA more effectively. Poor layout creates friction and drop-off.

6. Call-to-Action (CTA)

The CTA is the moment of truth. Testing here is about driving that critical click.

What to test:

- CTA text: Command-style (“Buy Now”) vs. value-driven (“See the Demo”)

- CTA style: Button vs. hyperlink

- CTA position: Top of email, after content, or repeated

- Number of CTAs: One focused ask vs. multiple optional links

- Color, shape, and size of the button

Why it matters: A weak CTA leaves readers with no clear next step. A strong one converts interest into action.

7. Copy Style and Tone

The voice and structure of your message can directly impact engagement.

What to test:

- Tone: Casual and conversational vs. formal and authoritative

- Style: Storytelling, instructional, persuasive, or data-driven

- Message length: Concise vs. detailed content

- Use of personalization: First name, product viewed, past activity

- Structure: Long paragraphs vs. bullet points and short blocks

Why it matters: Good copy builds trust and momentum. Test styles to see what resonates with your audience and leads them toward your CTA.

8. Offer Format and Framing

If your goal is conversion (e.g., purchases, sign-ups), how you present your offer matters just as much as the offer itself.

What to test:

- Discount type: “20% off” vs. “$10 off”

- Free trial vs. limited-time offer

- Scarcity vs. value framing: “Only 24 hours left” vs. “Best value of the year”

- Bonus items vs. price savings

- Lead magnets: Ebook vs. webinar vs. checklist

Why it matters: Framing affects perceived value. Subtle changes in offer structure can change how recipients respond—even when the underlying value stays the same.

Advanced Optimization Areas

9. Visual Assets

Test how images influence perception, clarity, and engagement.

What to test:

- Static images vs. animated GIFs

- Stock imagery vs. branded/custom visuals

- Face-forward photography vs. product-centric layouts

- Video thumbnail designs or play-button overlays

Why it matters: Images can communicate faster than words—but can also slow load time or confuse if misused.

10. Audience Segmentation

Send the same email to different segments and analyze how performance varies.

What to test:

- Demographic differences (age, role, industry)

- Behavior-based targeting (recent purchases, cart abandonment, product viewed)

- Engagement level (active vs. at-risk subscribers)

- Lifecycle stage (onboarding vs. retention vs. winback)

Why it matters: A message that performs poorly overall may work extremely well for the right audience. Segmentation unlocks relevance—and relevance drives results.

Start With Strategic Priorities

Don’t test everything at once. Focus on the elements most likely to impact your specific goal:

- Want higher open rates? Start with subject lines, preheaders, and send timing

- Want more clicks? Test layout, copy style, and CTA design

- Want more conversions? Focus on offers, audience targeting, and messaging tone

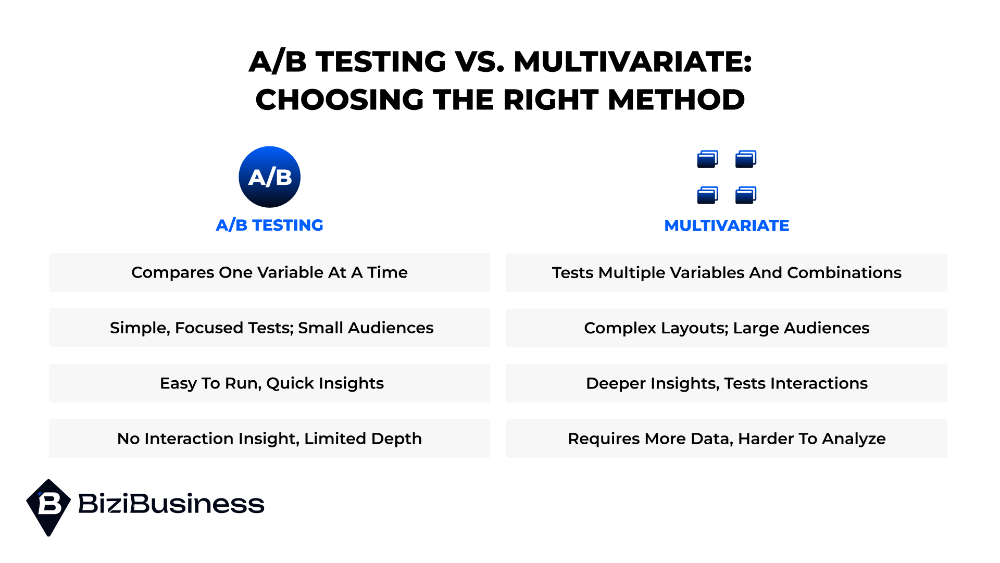

A/B Testing vs. Multivariate: Choosing The Right Method

Email optimization isn’t just about what you test—it’s about how you test. The two most common methods are A/B testing and multivariate testing. Each has a distinct purpose, level of complexity, and ideal use case.

Choosing the right testing method ensures your results are accurate, your insights are actionable, and your optimizations actually move the needle.

A/B Testing: Simple, Strategic, and Focused

What it is:

A/B testing (also known as split testing) compares two versions of a single variable to see which performs better.

Examples:

- Subject Line A vs. Subject Line B

- CTA button in red vs. blue

- Personalized greeting vs. generic opening

When to use it:

- You want a clear, focused result

- You have a smaller list size (under 100k)

- You’re testing a single hypothesis (e.g., “Does a question-based subject line increase opens?”)

Benefits:

- Simple to run and easy to understand

- Faster to execute and analyze

- Ideal for ongoing iterative improvements

Limitations:

- Only compares one variable at a time

- Can’t isolate interactions between variables

- Requires a statistically valid sample size to be meaningful

Multivariate Testing: Complex but Powerful

What it is:

Multivariate testing involves testing multiple variables at once to see how different combinations affect performance. You’re not just testing what works independently—you’re testing how elements work together.

Example:

Testing 2 subject lines × 2 header images × 2 CTA buttons = 8 total versions.
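To see how quickly the variant count grows, here is a small sketch that enumerates every combination. The variant labels and the 20,000-recipient test audience are placeholders, not recommendations.

```python
from itertools import product

# Placeholder labels for the three elements under test.
subject_lines = ["Subject A", "Subject B"]
header_images = ["Image A", "Image B"]
cta_buttons = ["CTA A", "CTA B"]

# Every combination of one subject line, one image, and one CTA.
variants = list(product(subject_lines, header_images, cta_buttons))
print(len(variants))  # 2 x 2 x 2 = 8


def recipients_per_variant(test_audience: int, variant_count: int) -> int:
    """Evenly divide the test audience across all variants."""
    return test_audience // variant_count


# A hypothetical 20,000-recipient test leaves 2,500 recipients per version.
print(recipients_per_variant(20_000, len(variants)))
```

Each added variable multiplies the number of versions, which is why multivariate tests need much larger lists than A/B tests.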

When to use it:

- You have a large enough list to support multiple variations

- You’re looking to optimize a complete design or layout

- You want to understand how variables interact (e.g., layout + copy + CTA)

Benefits:

- Offers deeper insight into how multiple factors influence results

- Helps identify the best combination of elements, not just individual winners

- More efficient than running multiple A/B tests back to back

Limitations:

- Requires significantly more data to reach statistical significance

- Results can be harder to interpret without the right tools

- Takes more time to build, monitor, and analyze

How to Decide Which Method to Use

| Situation | Use A/B | Use Multivariate |
|---|---|---|
| Testing a subject line or CTA copy | ✅ | |
| Optimizing a full email layout or template | | ✅ |
| Small to medium list size | ✅ | |
| Large list size and multiple variables | | ✅ |
| Need quick insights for a live campaign | ✅ | |
| Running exploratory, multi-factor testing | | ✅ |

Pro Tip: Start Simple, Scale Smart

Most marketers benefit by starting with A/B testing—it’s easier to manage, delivers faster insights, and sets a baseline for performance. Once you’ve built confidence and have the volume to support it, multivariate testing can help fine-tune your highest-performing emails.

How to Set Up an Effective Email Test

Testing isn’t just about running variations—it’s about designing the right test, the right way, to get results you can trust. A poorly structured test can lead to false positives, wasted effort, or misleading conclusions.

Whether you’re optimizing subject lines or overhauling templates, here’s how to set up a test that delivers real, reliable insight.

1. Start With a Clear Hypothesis

Every great test begins with a question:

“What do we believe will improve performance—and why?”

This is your hypothesis. It should be specific, measurable, and tied to a known business goal.

Example:

“We believe that using personalized subject lines will increase open rates because users are more likely to engage with messages that feel relevant to them.”

Avoid vague reasoning like “just to see what happens.” Without a hypothesis, you’re not testing—you’re guessing.

2. Identify One Variable to Test (at a Time)

Resist the temptation to test multiple things in one test unless you’re deliberately running a multivariate experiment. Changing subject line and CTA and layout in a single test makes it impossible to know what actually drove results.

Single-variable examples:

- “Does a question-based subject line outperform a statement?”

- “Will a red CTA button outperform a blue one?”

- “Does including a product image in the email body increase clicks?”

Why it matters: Isolating variables gives you clarity—and confidence in your next move.

3. Create a Strong Control and a Distinct Challenger

Your control is the existing version (what you usually send). Your challenger is the new version you believe might improve performance. The challenger should be meaningfully different—not just a slight tweak.

For example:

- Weak test: “Shop Now” vs. “Shop Today” (minimal difference)

- Strong test: “Shop Now” vs. “See This Week’s Best Deals” (different framing and intent)

Make your challenger bold enough to reveal whether the direction is worth pursuing.
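Once you have a control and a challenger, recipients should be assigned to each at random so the two groups are comparable. Most ESPs handle this for you. If you ever split a list by hand, a minimal sketch might look like the following, where the function name, the 20% test fraction, and the example addresses are all assumptions for illustration.

```python
import random


def split_for_ab_test(recipients, test_fraction=0.2, seed=42):
    """Randomly pick a test group and split it evenly in two.

    Returns (control, challenger, remainder). The remainder is the
    part of the list held back until the test has a winner.
    """
    rng = random.Random(seed)          # fixed seed keeps the split reproducible
    shuffled = list(recipients)
    rng.shuffle(shuffled)

    test_size = round(len(shuffled) * test_fraction)
    half = test_size // 2              # equal-sized groups

    control = shuffled[:half]
    challenger = shuffled[half:2 * half]
    remainder = shuffled[2 * half:]
    return control, challenger, remainder


emails = [f"user{i}@example.com" for i in range(10_000)]
control, challenger, remainder = split_for_ab_test(emails)
print(len(control), len(challenger), len(remainder))
```

Shuffling before slicing matters: splitting a list in its stored order (for example, by signup date) can put systematically different subscribers in each group.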

4. Define Your Sample Size and Duration

A test is only as trustworthy as its data. Too small a sample or too short a timeline = skewed results.

Best practices:

- Use 10%–30% of your total list for A/B tests

- Aim for at least 1,000 recipients per variant (more is better)

- Let the test run for 24–72 hours, depending on engagement velocity

- Avoid running tests over weekends or holidays unless you’re testing time-specific content

Tools like Optimizely, VWO, or your ESP’s built-in calculator can help determine a statistically significant sample size.
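If you want a rough idea of what those calculators do, the sketch below uses the standard normal-approximation formula for comparing two rates. It is an approximation, not the method of any particular tool. The 5% significance level and 80% power are conventional defaults assumed here, and the 20% and 23% open rates are made-up inputs.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate recipients needed per variant to detect the given change.

    Uses the normal approximation for a two-sided test of two proportions.
    alpha is the significance level; power is the chance of detecting
    the difference if it really exists.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical test: lifting a 20% open rate to 23%.
print(sample_size_per_variant(0.20, 0.23))

# A smaller expected lift (20% to 21%) needs far more recipients.
print(sample_size_per_variant(0.20, 0.21))
```

The smaller the lift you hope to detect, the more recipients each variant needs, so the 1,000-per-variant guideline is best treated as a floor rather than a target.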

5. Choose the Right Success Metric

Pick one primary metric that aligns with the goal of your test. This keeps your analysis focused and prevents cherry-picking data to match your hopes.

Examples:

- For subject lines: open rate

- For CTAs or copy: click-through rate (CTR)

- For offers or segmentation: conversion rate or revenue per email sent

Track secondary metrics (like unsubscribe rate or spam complaints), but don’t let them muddy your test’s primary objective.

6. Document Everything

Great testing isn’t just about running experiments—it’s about building a system that learns and evolves.

Keep a simple log or spreadsheet with:

- The hypothesis

- Control and challenger details

- Audience and send time

- Sample size and test duration

- Primary metric and outcome

- Your takeaway and what you’ll do next

This becomes your optimization library—so you don’t repeat past mistakes or miss trends over time.
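A spreadsheet is enough for most teams. If you prefer to keep the log in code, one possible structure is sketched below. The class name, field names, and sample values are all hypothetical and simply mirror the checklist above.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class EmailTestRecord:
    """One row in the testing log, mirroring the checklist above."""
    hypothesis: str
    control: str
    challenger: str
    audience: str
    send_time: str
    sample_size_per_variant: int
    duration_hours: int
    primary_metric: str
    outcome: str
    takeaway: str


def write_log(records, file):
    """Write records as CSV (header row first) to an open text file."""
    writer = csv.DictWriter(file, fieldnames=[f.name for f in fields(EmailTestRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


# Made-up example entry.
record = EmailTestRecord(
    hypothesis="Personalized subject lines will increase open rate",
    control="Generic subject line",
    challenger="Subject line with first name",
    audience="Newsletter subscribers",
    send_time="Tuesday 09:00",
    sample_size_per_variant=3000,
    duration_hours=48,
    primary_metric="open rate",
    outcome="inconclusive",
    takeaway="Retest with a larger sample",
)

buffer = io.StringIO()  # stands in for a real file opened with newline=""
write_log([record], buffer)
print(buffer.getvalue())
```

The resulting CSV opens directly in any spreadsheet tool, so the log stays usable by teammates who never touch the script.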

How to Analyze Results (Without Misleading Yourself)

Running a test is only half the job. The other half is making sense of the data—and knowing when you can confidently take action.

Testing without a clear framework for analysis can lead to false positives, overreactions, or wasted effort. Here’s how to analyze your email tests the right way, so you turn results into reliable improvements.

1. Wait Until You Reach Statistical Significance

It’s tempting to call a winner early—especially when one version pulls ahead fast. But performance often fluctuates during the first 12–24 hours. Declaring a result too soon can lead to false confidence.

What to do:

- Use a statistical significance calculator (many ESPs include one)

- Wait until you’ve hit your pre-determined sample size

- Don’t end the test based on intuition or impatience—let the data stabilize

Why it matters: Making decisions based on early spikes can lock you into suboptimal performance.
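For readers curious about what a significance calculator computes, here is a minimal sketch of a two-proportion z-test, one common way to compare two rates. Your ESP may use a different method. The function name and the open counts in the example are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(successes_a, sent_a, successes_b, sent_b):
    """Two-sided p-value for the difference between two rates.

    'Successes' are opens, clicks, or conversions, depending on
    the primary metric chosen for the test.
    """
    rate_a = successes_a / sent_a
    rate_b = successes_b / sent_b
    pooled = (successes_a + successes_b) / (sent_a + sent_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_error == 0:
        return 1.0  # no variation at all, so no detectable difference
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Invented example: 200 opens of 1,000 sent vs. 240 opens of 1,000 sent.
p_value = two_proportion_p_value(200, 1000, 240, 1000)
print(f"p-value: {p_value:.3f}")
print("significant at 5%" if p_value < 0.05 else "not significant at 5%")
```

A p-value below your chosen threshold (0.05 is a common convention) suggests the gap is unlikely to be chance alone. Checking it repeatedly while the test is still running inflates false positives, which is another reason to wait for the planned sample size.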

2. Focus on the Primary Metric You Set

Stick to the goal you defined before launching the test. If your goal was to increase open rate, don’t let a small bump in CTR or conversions distract you—especially if they weren’t statistically significant.

Avoid:

- Cherry-picking data points to validate what you wanted to work

- Shifting your interpretation once results are in

Why it matters: Moving the goalposts muddies learning and breaks your optimization process.

3. Consider Performance Over Time

Some email tests show strong early results, then fade. Others may gain traction later, especially if they’re part of a drip sequence or triggered flow.

For evergreen campaigns:

- Track results over 7–30 days if possible

- Look beyond opens and clicks to measure impact on long-term metrics (e.g., purchases, churn reduction, upsells)

Why it matters: A strong initial click rate is great—but sustained performance is what drives ROI.

4. Analyze Audience Segments Separately

Not all test results are universal. What works for new subscribers may flop with longtime customers. What resonates with B2C audiences might underperform in B2B.

Break down results by:

- Segment (e.g., recent signups vs. loyal buyers)

- Location or time zone

- Device (desktop vs. mobile)

Why it matters: A test may show a modest lift overall—but uncover a huge opportunity in a specific audience segment.
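A per-segment breakdown can be as simple as grouping results before computing rates. The sketch below assumes you can export rows of (segment, variant, sent, clicks) from your ESP. The segment names and numbers are made up to show the pattern.

```python
from collections import defaultdict


def click_rate_by_segment(rows):
    """Return {(segment, variant): click rate} from exported result rows.

    Each row is (segment, variant, sent, clicks). Rows sharing the same
    segment and variant are summed before the rate is computed.
    """
    totals = defaultdict(lambda: [0, 0])  # key -> [sent, clicks]
    for segment, variant, sent, clicks in rows:
        totals[(segment, variant)][0] += sent
        totals[(segment, variant)][1] += clicks
    return {key: clicks / sent for key, (sent, clicks) in totals.items() if sent}


# Made-up numbers for illustration only.
rows = [
    ("new_signups", "control", 1000, 30),
    ("new_signups", "challenger", 1000, 55),
    ("loyal_buyers", "control", 1000, 60),
    ("loyal_buyers", "challenger", 1000, 58),
]

rates = click_rate_by_segment(rows)
for (segment, variant), rate in sorted(rates.items()):
    print(f"{segment:13s} {variant:11s} {rate:.1%}")
```

In this invented data the challenger barely moves the overall average but clearly helps new signups, which is exactly the kind of pattern an aggregate view hides. Keep in mind that each segment is a smaller sample, so per-segment differences need the same significance check as the overall result.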

5. Consider Impact, Not Just Lift

A statistically significant lift doesn’t always mean a meaningful one. For example, a 1% relative increase in open rate sounds good, but if your base open rate is 10%, that is only 0.1 percentage points more of your audience engaging.

Ask:

- How does this change impact revenue, signups, or retention?

- Is the winning version scalable across future campaigns?

- Is it worth implementing for the gain?

Why it matters: Your time and attention are valuable. Focus on optimizations that generate noticeable business impact—not just cosmetic wins.
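The gap between relative lift and real-world impact is easy to check with a few lines of arithmetic. The function name and the 50,000-subscriber list below are assumptions for illustration.

```python
def lift_summary(base_rate: float, relative_lift: float, list_size: int):
    """Translate a relative lift into absolute terms.

    Returns (new rate, gain in rate, extra engaged subscribers per send).
    """
    new_rate = base_rate * (1 + relative_lift)
    absolute_gain = new_rate - base_rate
    extra_engaged = absolute_gain * list_size
    return new_rate, absolute_gain, extra_engaged


# A 1% relative lift on a 10% open rate, sent to a hypothetical 50,000 subscribers.
new_rate, gain, extra = lift_summary(0.10, 0.01, 50_000)
print(f"new rate {new_rate:.2%}, gain {gain * 100:.2f} points, about {extra:.0f} extra opens")
```

Expressing the result as extra opens, clicks, or orders per send makes it easier to judge whether a winner is worth the work of rolling it out.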

6. Document and Share Learnings

Always record what worked, what didn’t, and what you plan to do next. A single test might not tell a full story—but patterns over time reveal powerful insights.

Use a testing log or database to track:

- Test goals and variables

- Performance by audience and campaign type

- Unexpected insights and anomalies

- Implementation status of winning versions

Why it matters: Documentation keeps your team aligned, avoids repeating failed ideas, and helps new team members learn faster.

Common Mistakes In Email Testing

Running email tests is easy. Running valid, insightful, and impactful email tests? That’s where most marketers stumble.

Below are the most common testing mistakes that dilute your results, waste valuable audience exposure, or lead to false conclusions—and what you can do to avoid them.

1. Testing Too Many Variables at Once

The mistake:

Making changes to multiple elements—subject line, header image, CTA, and layout—within a single test and expecting clear answers.

Why it fails:

When you test multiple variables simultaneously in a basic A/B test, you can’t isolate which one influenced performance. If your click-through rate goes up, was it the new CTA, the hero image, or both?

How to fix it:

- Use A/B testing for single-variable isolation

- Move to multivariate testing only when you have a large enough list and a clear testing matrix

- Break complex tests into sequential stages to avoid overwhelming your sample

Pro tip: One good variable test is more useful than five messy ones.

2. Declaring Winners Too Soon

The mistake:

Stopping the test after a few hours, or judging it on early returns from just a small portion of your list.

Why it fails:

Initial spikes in performance often level off or even reverse. Ending a test prematurely skews your conclusions and can lead you to scale ineffective tactics.

How to fix it:

- Set minimum thresholds before starting: sample size, test duration, and statistical confidence

- Avoid calling a test before 24–48 hours, unless you’re testing real-time urgent sends (e.g., flash sales)

Pro tip: Trust the data over the impulse to act fast. Let the test mature before making a call.

3. Testing Without a Clear Hypothesis

The mistake:

Launching a test just to “see what happens,” without stating a goal or expectation.

Why it fails:

If you don’t know what success looks like, you can’t recognize it—or replicate it.

How to fix it:

- Always write down a hypothesis before running a test: “We believe [X change] will improve [Y metric] because [Z reason].”

- Tie every test to a clear business objective: higher opens, better conversions, increased engagement, etc.

Pro tip: The strength of your test depends on the clarity of your intent.

4. Drawing Conclusions From Inconclusive Data

The mistake:

Using small sample sizes or unbalanced test groups that don’t represent your audience—and then assuming your result is meaningful.

Why it fails:

Results from underpowered tests are often statistical noise, not insight. Acting on them can backfire.

How to fix it:

- Use calculators to determine statistical significance based on your list size and expected lift

- Avoid testing if your audience is too small (fewer than 1,000 recipients per version is risky)

- If needed, run the same test multiple times over smaller segments and look for consistent results

Pro tip: Don’t confuse “some data” with “enough data.”

5. Chasing Vanity Metrics Over Business Impact

The mistake:

Focusing tests on metrics like open rate without considering downstream effects like conversions, revenue per email, or customer lifetime value.

Why it fails:

An email that gets more opens but fewer conversions may look like a win—until you realize it’s costing you revenue.

How to fix it:

- Choose test metrics that align with your campaign’s true goal

- Track full-funnel performance, not just top-of-funnel indicators

- Layer business KPIs (e.g., purchases, upgrades, subscriptions) into your post-test review

Pro tip: Treat testing like strategy, not surface-level tuning.

6. Failing to Apply the Results

The mistake:

You ran a great test. You found a clear winner. But no one implemented it—or you forgot to apply it to future emails.

Why it fails:

Unapplied tests create data with no direction. They cost time, waste insights, and slow momentum.

How to fix it:

- Build a simple test documentation system (spreadsheet, Notion board, or shared doc)

- Assign ownership for implementing results and updating templates

- Regularly revisit winners and scale them across campaigns, automations, and workflows

Pro tip: A test isn’t complete until its results are used.

7. Assuming Results Are Permanent

The mistake:

A test from six months ago worked—so you keep repeating the tactic without retesting or validating.

Why it fails:

What worked last quarter might not work today. Audience behavior, inbox environments, and market dynamics shift constantly.

How to fix it:

- Set reminders to retest core assumptions (subject lines, send times, template structure)

- Track performance over time to spot decay in test winners

- Build a culture of continuous improvement, not “set it and forget it”

Pro tip: Don’t let past wins become future blind spots.

Optimization Frameworks You Can Steal

Testing without a system is just guesswork in disguise. To drive consistent, compounding improvements, your email program needs repeatable frameworks—strategic processes that turn isolated tests into ongoing learning and optimization.

Here are four proven frameworks you can adopt and adapt to fit your team, tools and platforms.

1. The Test → Learn → Scale Loop

This is the foundational feedback loop for any high-performing email strategy. It’s built on three simple steps:

- Test: Launch a focused, hypothesis-driven experiment

- Learn: Analyze the results, extract insights, and document findings

- Scale: Apply the learning to broader campaigns, sequences, or segments

Why it works:

Instead of chasing wins in isolation, this loop creates a knowledge base that grows stronger over time. Each successful test becomes a play you can reuse—and every failure teaches you something valuable.

How to implement it:

- Keep a shared testing doc or optimization board

- Set time every quarter to review past learnings and update your playbook

- Tag winning campaigns in your ESP so you can easily clone and scale them

2. The 80/20 Optimization Strategy

Most of your email impact will come from a few core levers. This framework helps you identify and focus on those areas first.

What to focus on:

- Top 20% of campaigns that drive 80% of revenue

- Highest-traffic automations (welcome series, cart abandonment, re-engagement)

- Key conversion points: subject line → click → action

Why it works:

You don’t have to optimize everything to see major gains. Prioritize the campaigns and content that have the biggest potential upside.

How to implement it:

- Run a revenue or engagement audit on your last 90 days of email

- Highlight your top 5 revenue-generating or highest-volume sends

- Run 1 test per month in this group and apply learnings to future sends
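The audit step can be approximated with a short script once you have revenue per campaign. This is a sketch only: the campaign names and revenue figures are invented, and the 80% threshold is simply the rule of thumb this framework is named after.

```python
def top_campaigns_by_revenue(revenue_by_campaign, share=0.8):
    """Return the highest-revenue campaigns that together reach `share` of total revenue."""
    total = sum(revenue_by_campaign.values())
    ranked = sorted(revenue_by_campaign.items(), key=lambda item: item[1], reverse=True)

    selected, running = [], 0.0
    for name, revenue in ranked:
        if running >= share * total:
            break                      # threshold already reached
        selected.append(name)
        running += revenue
    return selected


# Invented 90-day revenue figures.
revenue = {
    "welcome_series": 5200,
    "cart_abandonment": 3100,
    "weekly_newsletter": 900,
    "winback": 500,
    "survey": 300,
}

print(top_campaigns_by_revenue(revenue))
```

With these made-up figures, two of the five sends account for more than 80% of revenue, so that is where the first tests would go.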

3. The Monthly Testing Cadence

Optimization is often derailed by lack of structure. This simple rhythm makes it a habit.

Cadence example:

- Week 1: Plan test and build variants

- Week 2: Launch test

- Week 3: Analyze results

- Week 4: Apply learning + prep next test

Why it works:

A fixed cadence ensures testing doesn’t get deprioritized. It also gives enough breathing room for thoughtful analysis and real performance changes.

How to implement it:

- Block a recurring monthly time slot for email optimization

- Tie each month’s test to your current business goal (e.g., boost demo signups, improve upsell rates)

- Rotate testing focus: subject lines one month, offer framing the next, layout the following

4. The Repurpose + Refine Formula

Not every test needs to start from scratch. This framework helps you take proven winners and evolve them for new audiences or campaigns.

Steps:

- Identify a high-performing email

- Repurpose the core idea (layout, CTA style, offer) in a new campaign

- Run a refinement test (change 1–2 elements to fit context or audience)

- Repeat and document learnings

Why it works:

It builds on success while still driving iteration. You save time and reduce risk—because you’re never starting with a cold guess.

How to implement it:

- Tag top performers in your ESP dashboard

- Create a “Winning Email Vault” in your doc or design system

- Pull from this library any time you’re launching a new series or promotion

Bonus Tip: Build a Testing Culture, Not Just a Workflow

Frameworks work best when your entire team buys into the mindset. Share results across departments. Encourage healthy curiosity. Reward insights, not just wins.

Because in email optimization, the smartest strategy isn’t knowing all the answers—it’s building a system that keeps finding them.

Better Emails Are Built, Not Hoped For

Email testing isn’t a trend. It’s a discipline. The most effective marketers don’t rely on hunches or last year’s best practices—they build better emails through data, structure, and iteration.

When you embrace testing as a core function rather than a side project, you shift your entire email program from reactive to proactive. You move from hoping your audience engages to knowing what works, why it works, and how to do it again.

Optimization doesn’t require a massive team or expensive tech. It just requires:

- A focused hypothesis

- A clear success metric

- A willingness to learn—even from failed tests

- And a process to capture and apply what you’ve learned

Every email is a chance to improve. And when you build a system around that idea, your results compound.

So test smart. Test often. And treat every send as an opportunity—not just to get better results, but to get smarter at getting those results.
